Swarm Intelligence - follow these simple rules

It has been shown that the collective action of many agents governed by simple rules can produce behaviour beyond the capabilities of any single individual. This decentralised coordination between individuals has mainly been studied in naturally occurring swarms, such as ant and bee colonies, flocks of birds and herds of other animals. The overall group behaviour is highly complex and cannot be generated by a single individual.

Typically, swarm intelligence assumes that the group consists of many similarly built individuals, that interactions between agents rely only on local information exchanged either directly or through the environment (stigmergy), and that the group behaviour self-organises from those interactions.

Recently, swarms have been viewed more abstractly in an attempt to generate intelligent group behaviour artificially. One of the most famous examples is “boids”, an artificial life algorithm developed by Craig Reynolds that mimics the flocking of birds. The rules the agents follow are simple: align with the orientation of the others, fly towards them, and keep a minimum distance.

The project presented here is partly based on Reynolds' ideas, but extends them to mimic another type of animal behaviour - forming a trail or line.

Similar to ants forming a trail using pheromones and tactile senses, the agents can see and navigate to each other.

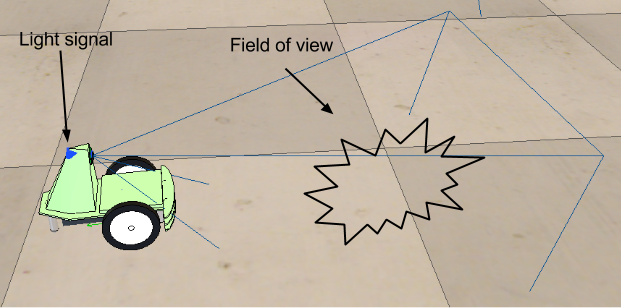

Unlike ants, which communicate through pheromones left in the environment and optimise the trail according to pheromone concentration, the agents here transfer information more directly - through light. The inspiration comes from fireflies, which use light to communicate and attract each other. A nice example of natural synchrony can be seen in the TED Talk “Steven Strogatz: How things in nature tend to sync up”.

Simulation

Each agent in the simulated world has the following abilities:

- Move around freely and change direction of travel

- Generate a light signal at its own unique frequency

- Sense a light signal

These abilities can be illustrated with a sample robot in the V-REP 3D robotics simulator.

Each individual starts at a completely random location and orientation and selects a unique frequency for its light signal. Afterwards it starts obeying the following rules:

- Move in the direction it is facing - the robot cannot stop and must keep moving along its heading, which plays a role in finding a “partner” or group to follow.

- If it hasn’t flashed its light after a specific time period, flash - the unique frequency sets the minimum rate at which the light must flash, which is crucial because flashing is the only means of finding neighbouring robots.

- If it sees another agent flashing in its field of view, flash and rotate slightly towards it.

The last rule implies that once a trail has formed and the first robot flashes, all agents in the group flash in sync as well. In effect, the leader of a subgroup enslaves the light frequencies of its followers, and this shapes the group's ability to find other groups.
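
The three rules can be sketched as a single update function. This is a minimal illustration rather than the project's actual code: the `Agent` fields, the `step` function and the constants `SPEED` and `TURN_GAIN` are all assumed names and values, and the bearing of a seen flash is taken as an input instead of being computed from a sensor model.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    x: float
    y: float
    heading: float        # radians, direction of travel
    flash_period: float   # seconds, 1 / the agent's own flash frequency
    since_flash: float = 0.0


SPEED = 1.0      # assumed constant forward speed (world units per second)
TURN_GAIN = 0.2  # assumed fraction of the bearing error corrected per step


def step(agent, dt, seen_bearing=None):
    """Advance one agent by dt seconds; return True if it flashes.

    seen_bearing is the angle (radians, relative to the heading) of a
    flash seen in the field of view, or None when nothing was seen.
    """
    # Rule 1: always move in the direction the agent is facing.
    agent.x += SPEED * dt * math.cos(agent.heading)
    agent.y += SPEED * dt * math.sin(agent.heading)
    agent.since_flash += dt

    flashed = False
    if seen_bearing is not None:
        # Rule 3: a seen flash triggers a flash and a slight turn towards it.
        agent.heading += TURN_GAIN * seen_bearing
        flashed = True
    elif agent.since_flash >= agent.flash_period:
        # Rule 2: otherwise flash once the agent's own period has elapsed.
        flashed = True

    if flashed:
        agent.since_flash = 0.0
    return flashed
```

Because a seen flash resets the timer, a follower that keeps seeing a faster-flashing agent never reaches its own period, which is one way to read the "enslaving" of frequencies described above.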

The simulation consists of between 20 and 40 robots, as shown above, that exist in a “world” and are tasked with following the above rules. The aim was to determine whether such a simple set of actions can produce more complex behaviour and form trails of agents.

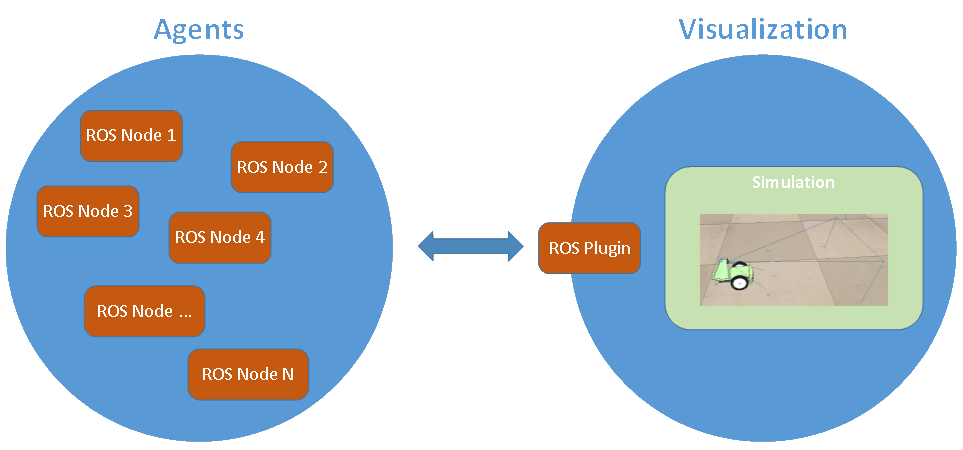

Each agent runs as a separate ROS node in the network and works in a sense-act loop. The Robot Operating System (ROS) is a meta operating system that can be viewed as the communication infrastructure for a distributed network. Each node in the network can be thought of as a process, and nodes can run across different physical machines. The initial infrastructure contained a plug-in that linked ROS to V-REP, a 3D robotics simulator. However, during the experiment the simulation proved too slow under the available computational and time constraints - with extra collision avoidance added, grouping took more than several hours to occur. The V-REP simulation was therefore replaced with a simplified visualisation program written using OpenCV, an open source computer vision library. This allowed for faster code execution and assessment of the system.
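
To give a feel for the sense-act loop without requiring ROS, here is a rough in-process sketch. It does not use any ROS API: the `Bus` class is a hypothetical stand-in for publish/subscribe communication, and the `"flashes"` topic, the `AgentNode` class and its methods are invented for illustration, since the real topics and messages are not described here. Visibility is ignored, so every node "sees" every flash.

```python
from collections import defaultdict


class Bus:
    """Tiny in-process publish/subscribe bus (a stand-in, not ROS)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        for callback in self._subscribers[topic]:
            callback(message)


class AgentNode:
    """One agent running its own sense-act loop (hypothetical names)."""

    def __init__(self, name, bus):
        self.name = name
        self.bus = bus
        self.inbox = []
        # Every flash published on the bus is queued in this node's inbox.
        bus.subscribe("flashes", self.inbox.append)

    def sense(self):
        """Return the flashes from other agents received since the last call."""
        seen = [sender for sender in self.inbox if sender != self.name]
        self.inbox.clear()
        return seen

    def flash(self):
        self.bus.publish("flashes", self.name)

    def spin_once(self):
        """One sense-act iteration: flash if another agent's flash was sensed."""
        seen = self.sense()
        if seen:
            self.flash()
        return seen
```

With two nodes `a` and `b` on the same bus, calling `a.flash()` and then `b.spin_once()` makes `b` answer with its own flash, which `a` picks up on its next iteration.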

Overall it has the following architecture:

The code was written from scratch and is available upon request! Please see the About me page for details on how to contact me.

The parameters that can be tuned are the following:

- Angle - the viewing angle of the robot.

- Distance - the maximum distance at which the robot can see other lights.

- World size - the overall size of the world.

- Noise - random Gaussian noise added to both the location and the orientation of each agent.
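
As an illustration of how the angle, distance and noise parameters could enter the simulation, the sketch below checks whether one agent lies inside another's viewing cone and perturbs a pose with Gaussian noise. The function names `can_see` and `add_noise` are hypothetical, and it assumes the viewing angle is the full width of a cone centred on the heading, which the description above does not specify.

```python
import math
import random


def can_see(observer_xy, observer_heading, target_xy, view_angle_deg, max_distance):
    """Return True if the target lies inside the observer's viewing cone."""
    dx = target_xy[0] - observer_xy[0]
    dy = target_xy[1] - observer_xy[1]
    # Distance parameter: lights further away than this are not seen.
    if math.hypot(dx, dy) > max_distance:
        return False
    # Bearing of the target relative to the heading, wrapped to [-pi, pi).
    bearing = math.atan2(dy, dx) - observer_heading
    bearing = (bearing + math.pi) % (2 * math.pi) - math.pi
    # Angle parameter: assumed to be the full cone width, so compare to half.
    return abs(bearing) <= math.radians(view_angle_deg) / 2


def add_noise(x, y, heading, sigma_pos, sigma_heading, rng=random):
    """Perturb location and orientation with zero-mean Gaussian noise."""
    return (
        x + rng.gauss(0.0, sigma_pos),
        y + rng.gauss(0.0, sigma_pos),
        heading + rng.gauss(0.0, sigma_heading),
    )
```

For example, a target at (3, 3) seen from the origin with heading 0 sits at a bearing of 45 degrees, so it is inside a 120 degree cone but outside a 30 degree one.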

The following few videos show different parameter combinations and illustrate the variety of behaviours that occur.

In the first video, after the initial few minutes of reshuffling and agent alignment, patterns start to emerge (mark 1:53 in the video below, please scroll to other points if needed).

Note: To additionally speed up the cropped videos, use the built-in speed settings of YouTube's HTML5 player.

In the first video several stages can be observed:

- Stage 0: Initial random position

- Stage 1: Groups of 2 or 3 robots

- Stage 2: The majority of the robots are split into 2 groups

- Stage 3: The two groups merge

- Afterlife: The big group disperses into 2 smaller ones and oscillates between stages 2 and 3 until the true leader, the agent with the highest frequency, is found.

Even when the paths of different groups of agents cross, the groups do not necessarily merge. This is due to their different flashing frequencies, which make them invisible to each other.

Interestingly, the leaders of the groups were found to converge to the individuals with the highest flash frequency. This suggests that a higher frequency increases an agent's ability to be seen by other individuals and to attract them.

Simulation 2

Simulation 2 illustrates the behaviour when the range of the sensors is large, but the angle of view is narrow (30 degrees). This allows the robots to see each other from a distance, so small groups form fast. However, it also means that they can just as easily detect robots outside their current subgroup, which reduces the chances of creating larger trails.

Simulation 3

In this simulation the reverse approach is taken - a short range with a wide angle (120 degrees). Again, small groups form quickly. They are more resistant to noise, but also find it harder to pick up new individuals.

Swarm intelligence allows agents that act only on their local environment to generate actions that are globally beneficial for the individuals. The simple rules governing their behaviour create more value when multiple agents interact, and the resulting complexity is based exclusively on these rules.

Overall, this suggests that building many simple robots that have no centralised communication (thus removing a single point of failure) and are easily programmed allows beneficial behaviours to emerge from the interactions within the swarm.

Other simulations

For those interested, other simulations with even more parameter changes can be seen here.

References

[1] Jeong, D., and Lee, K. (2014) “Dispersion and Line Formation in Artificial Swarm Intelligence”, arXiv preprint arXiv:1407.0014.

[2] Reynolds, C. W. (1987) “Flocks, Herds, and Schools: A Distributed Behavioral Model”, Computer Graphics, 21(4) (SIGGRAPH ’87 Conference Proceedings).

[3] Holland, O., and Melhuish, C. (1999) “Stigmergy, self-organization, and sorting in collective robotics”, Artificial Life, 5(2), 173-202.

[4] Dorigo, M., Maniezzo, V., and Colorni, A. (1996) “Ant system: optimization by a colony of cooperating agents”, IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics, 26(1), 29-41.

[5] Open Computer Vision Library (OpenCV), http://opencv.org

[6] Robot Operating System (ROS), http://www.ros.org

[7] Virtual Robotic Experimentation Platform (V-REP), http://www.coppeliarobotics.com