Overview of the Assessment List for Trustworthy Artificial Intelligence (ALTAI)

An update to the EU Ethics Guidelines for Trustworthy Artificial Intelligence was drowned out by the noise around the Schrems II case, in which the European Court of Justice (ECJ) invalidated the Privacy Shield and, for now, essentially made it impossible for companies to work under both US and EU jurisdiction. The aim of the guidelines is to give the creators and users of AI systems a foundation for instilling trust in the way those systems are designed, built and run, so that they are lawful, ethical and robust.

Initial Guidelines

First introduced by the High-Level Expert Group on AI in early April 2019, the guidelines defined trustworthiness around 3 main pillars:

- LAWFUL - respecting all applicable laws and regulations

- ETHICAL - respecting ethical principles and values

- ROBUST - from a technical perspective, while also taking into account its social environment.

This was a good start, but it left a lot to be desired: as any practitioner would point out, these statements are as all-encompassing as they are vague. They are not only hard to act upon, but also hard to quantify and assess.

You can read the full release here.

Other relevant background material includes the European Commission white paper on AI and the EU strategy on maintaining AI excellence.

2020 Update

The current update to these guidelines (July 2020) aims to provide more clarity and actionable insight into how the earlier papers can be used when developing AI systems. It takes the form of a final Assessment List for Trustworthy AI, organized around the 7 key requirements set out in the ethics guidelines, which are meant to support the rights protected by the European Convention on Human Rights:

- Human Agency and Oversight,

- Technical Robustness and Safety,

- Privacy and Data Governance,

- Transparency,

- Diversity, Non-Discrimination and Fairness,

- Environmental and Societal Well-Being,

- Accountability.

The concept behind ALTAI is to move from murky suggestions about the ideal strategy to something actionable, which in this case, as in any engineering endeavor, means a list. You can jump between the different concepts and their implications for AI researchers, designers and experts from the links above.

The project is hosted on a new website, https://altai.insight-centre.org/, which allows users to register and work through the checklist. The questions (which are not final; the ALTAI team is happy to receive feedback) are designed to minimize overlap, to each target a specific requirement from the list above, and to have a clear answer.
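To make the idea of an actionable list a little more concrete, here is a minimal sketch of how a team might track its own answers against the 7 requirements, assuming simple yes/no answers and assuming every question is phrased so that "yes" is the desired answer. The `Requirement`, `Question` and `Checklist` names and the sample questions are hypothetical illustrations; they are not ALTAI's actual data model, question wording, or the website's format.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Requirement(Enum):
    # The 7 key requirements listed in the article
    HUMAN_AGENCY = "Human Agency and Oversight"
    ROBUSTNESS = "Technical Robustness and Safety"
    PRIVACY = "Privacy and Data Governance"
    TRANSPARENCY = "Transparency"
    FAIRNESS = "Diversity, Non-Discrimination and Fairness"
    WELL_BEING = "Environmental and Societal Well-Being"
    ACCOUNTABILITY = "Accountability"


@dataclass
class Question:
    text: str
    requirement: Requirement  # each question targets exactly one requirement
    answer: Optional[bool] = None  # None means "not answered yet"


@dataclass
class Checklist:
    questions: List[Question] = field(default_factory=list)

    def answer(self, index: int, value: bool) -> None:
        self.questions[index].answer = value

    def progress(self) -> Dict[Requirement, float]:
        """Fraction of answered questions per requirement (0.0 if it has none)."""
        result = {}
        for req in Requirement:
            qs = [q for q in self.questions if q.requirement is req]
            answered = sum(q.answer is not None for q in qs)
            result[req] = answered / len(qs) if qs else 0.0
        return result

    def open_issues(self) -> List[Question]:
        """Questions answered 'no' (assumed to be points needing attention)."""
        return [q for q in self.questions if q.answer is False]


# Hypothetical questions, paraphrased for illustration only
checklist = Checklist([
    Question("Can a human override the system's decisions?", Requirement.HUMAN_AGENCY),
    Question("Has the system been tested against adversarial inputs?", Requirement.ROBUSTNESS),
    Question("Is the personal data used kept to a minimum?", Requirement.PRIVACY),
])
checklist.answer(0, True)
checklist.answer(1, False)
print([q.text for q in checklist.open_issues()])
# ['Has the system been tested against adversarial inputs?']
```

Note that `progress` reports 0.0 both for a requirement with unanswered questions and for one with no questions at all; a real tool would probably want to tell those two cases apart.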

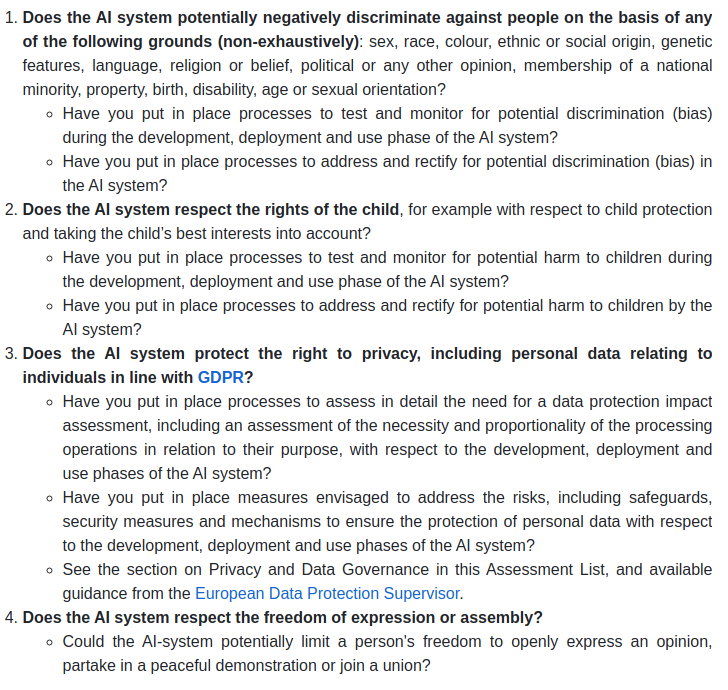

Before we jump into the actual questions, it's worthwhile to discuss the relevant sections of the European Convention on Human Rights (ECHR) and the European Social Charter, which cover, for instance, the rights to human dignity, privacy and non-discrimination. No AI system should impair any of those rights for people within the EU. As a fundamental rights impact assessment, ALTAI raises the following 4 questions for developers:

All of this aims to establish that the mere existence of the AI system won't be detrimental to society at large or to particular subgroups. Another interesting aspect is that these points are meant to be checked not only when the system is created, but continuously throughout deployment, since most AI models are not static and evolve over time with new data.
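One way to read the call for continuous monitoring is to recompute a subgroup metric for every deployment window. The sketch below assumes a hypothetical log of `(window, subgroup, decision)` records and uses the gap in positive-outcome rates between subgroups (a demographic-parity style check) with an arbitrary 0.1 threshold. Both the metric and the threshold are illustrative choices, not something ALTAI prescribes.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Each record: (window id, subgroup label, model decision where 1 = positive outcome)
Record = Tuple[int, str, int]


def positive_rates(records: Iterable[Record]) -> Dict[int, Dict[str, float]]:
    """Share of positive decisions per subgroup, computed separately for each window."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # window -> group -> [positives, total]
    for window, group, decision in records:
        c = counts[window][group]
        c[0] += decision
        c[1] += 1
    return {
        w: {g: pos / total for g, (pos, total) in groups.items()}
        for w, groups in counts.items()
    }


def flag_windows(records: Iterable[Record], max_gap: float = 0.1) -> List[int]:
    """Windows in which the largest gap between subgroup rates exceeds max_gap."""
    flagged = []
    for window, rates in sorted(positive_rates(records).items()):
        if max(rates.values()) - min(rates.values()) > max_gap:
            flagged.append(window)
    return flagged


# Hypothetical deployment log: window 1 is balanced, window 2 has drifted.
log = [
    (1, "A", 1), (1, "A", 0), (1, "B", 1), (1, "B", 0),
    (2, "A", 1), (2, "A", 1), (2, "B", 0), (2, "B", 1),
]
print(flag_windows(log))  # [2]
```

In practice the window size, the subgroups and the fairness metric would all need to be chosen for the specific system; the point is only that the check runs repeatedly rather than once before release.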

However, what is left unmentioned are questions about latency, absolute performance or mean time between acceptable failures, as well as the long-term consistency of the algorithms. The latter matters because developing AI systems depends on data collection, and that data may itself be the output of another AI system, propagating a historic view of the world.
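Operational metrics like these are straightforward to track once failures and response times are logged. As a sketch, assuming hypothetical failure timestamps in hours and per-request latencies in milliseconds, the mean interval between consecutive failures (a common simplification of MTBF) and a nearest-rank latency percentile could be computed like this:

```python
import math
from typing import List


def mean_time_between_failures(failure_times: List[float]) -> float:
    """Mean interval between consecutive failures, in the unit of the timestamps.

    What counts as a failure (versus an acceptable one) is left to the team.
    """
    if len(failure_times) < 2:
        raise ValueError("need at least two failures to measure an interval")
    times = sorted(failure_times)
    intervals = [later - earlier for earlier, later in zip(times, times[1:])]
    return sum(intervals) / len(intervals)


def latency_percentile(latencies_ms: List[float], pct: float) -> float:
    """Nearest-rank percentile of observed latencies, e.g. pct=95 for p95."""
    if not latencies_ms:
        raise ValueError("no latencies recorded")
    ordered = sorted(latencies_ms)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical monitoring data
failures_h = [10.0, 34.0, 70.0, 130.0]  # hours since deployment
latencies = [12, 15, 11, 80, 14, 13, 16, 12, 15, 200]  # ms per request
print(mean_time_between_failures(failures_h))  # 40.0
print(latency_percentile(latencies, 50))  # 14
print(latency_percentile(latencies, 95))  # 200
```

The gap between p50 and p95 in this made-up data shows why a single average latency can hide the tail behavior that users actually experience.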